Interactive Performance for Movies and Video Games

This paper introduces a straightforward technique for combining

performance-based animation with a physical model in order to support

complex interactions with objects in a real-time animated world. The

core of the approach builds off of a conceptual framework which unifies

kinematic playback of motion capture and dynamic motion synthesis. The

proposed method integrates a real-time recording, which allows a human

actor to provide an online performance, with dynamics-based control, in

order to modify the motion data based on the physical conditions felt

by the character – including external influences and the effects of

balance. The system smoothly unifies the kinematic and dynamics systems

by changing the influence of each in a semi-automatic fashion, both

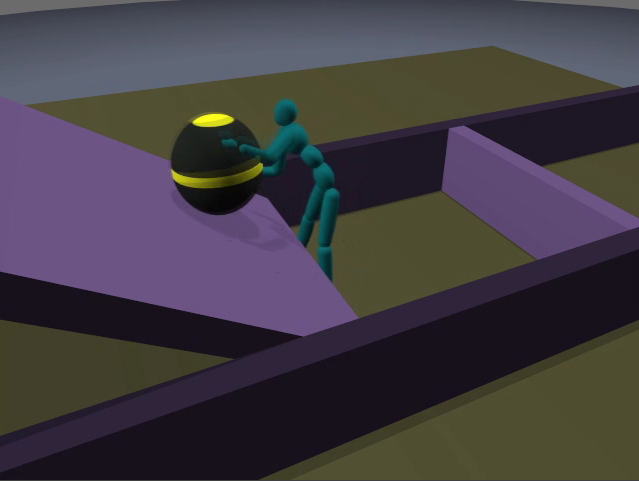

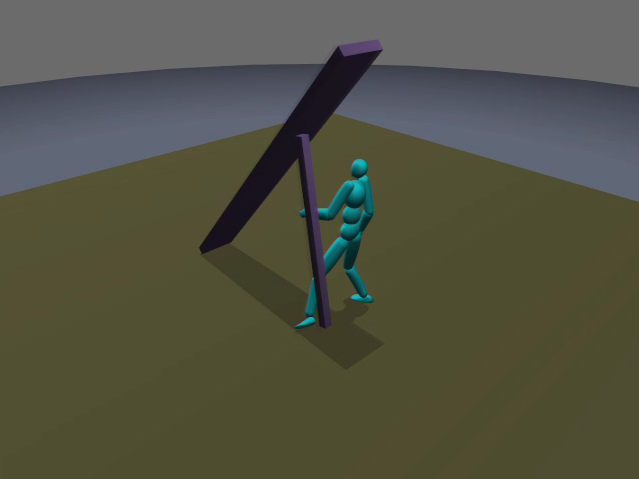

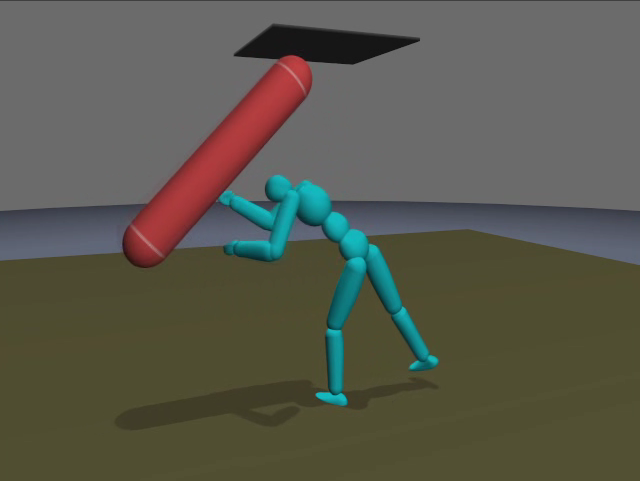

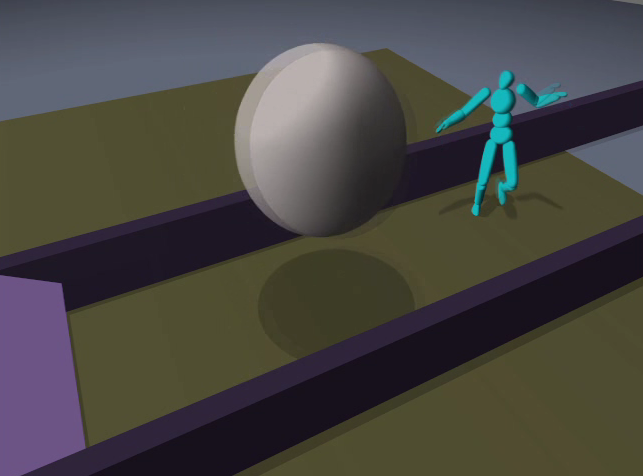

spatially within the body and temporally. Examples of rich interactions

interleaved with intelligent response highlights power of the

technique. The current system is intended for offline motion synthesis

of rich, virtual interactions with real-time, reactive human-driven

performance – although ultimately the general approach is likely to be

beneficial for online applications such as electronic games.
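To make the idea of varying the kinematic and dynamic influence "spatially within the body and temporally" more concrete, here is a minimal Python sketch. It is an illustration only, not the thesis's actual controller: the linear per-joint blend, the `ramp` function, the joint names, and all numeric values are assumptions chosen for clarity.

```python
# Hypothetical sketch: per-joint, time-varying blend between a motion-capture
# (kinematic) pose and a simulated (dynamic) pose. All names and values here
# are illustrative assumptions, not taken from the thesis.

def ramp(t, t_start, duration):
    """Temporal weight: rises linearly from 0 to 1 over `duration` seconds
    starting at `t_start`, clamped to [0, 1]."""
    if duration <= 0:
        return 1.0 if t >= t_start else 0.0
    return min(1.0, max(0.0, (t - t_start) / duration))

def blend_pose(kinematic, dynamic, spatial, temporal):
    """Blend joint angles (radians) joint by joint.

    weight = spatial[joint] * temporal, where 0 means purely kinematic
    (follow the actor) and 1 means purely dynamic (follow the simulation).
    """
    pose = {}
    for joint, k_angle in kinematic.items():
        w = spatial[joint] * temporal
        pose[joint] = (1.0 - w) * k_angle + w * dynamic[joint]
    return pose

# Assumed scenario: an impact near the shoulder at t = 1.0 s.
kinematic = {"shoulder": 0.0, "elbow": 0.2, "hip": 0.1}    # from the actor
dynamic = {"shoulder": 1.0, "elbow": 0.6, "hip": 0.9}      # from the simulation
spatial = {"shoulder": 1.0, "elbow": 0.5, "hip": 0.0}      # falloff from contact

blended = blend_pose(kinematic, dynamic, spatial, ramp(1.25, 1.0, 0.5))
```

In this sketch the hip keeps following the actor's performance while the shoulder is pulled halfway toward the simulated response, and the temporal ramp lets that influence grow smoothly after the contact rather than switching abruptly.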

Special thanks to Roby Atadero, Steve Suh, Muzaffer "Muzo" Akbay, and Victor Zordan.

[Thesis Document]:

- pdf [2.6MB]

[Videos]:

- submission video [33.5MB]

- live capture [36.9MB]